Introduction To Web Scraping Using Selenium

Web Scraping Using Selenium And Python

BeautifulSoup owes much of its popularity to its excellent documentation and friendly user community. Most web scrapers have probably used BeautifulSoup before moving on to Scrapy. The tool isn't complex, and it makes it easy to traverse an HTML document and pick out the data you need. Scrapy, by contrast, is the tool for building complex web crawlers and scrapers, because you can create a large number of concurrent workers and each of them runs efficiently.

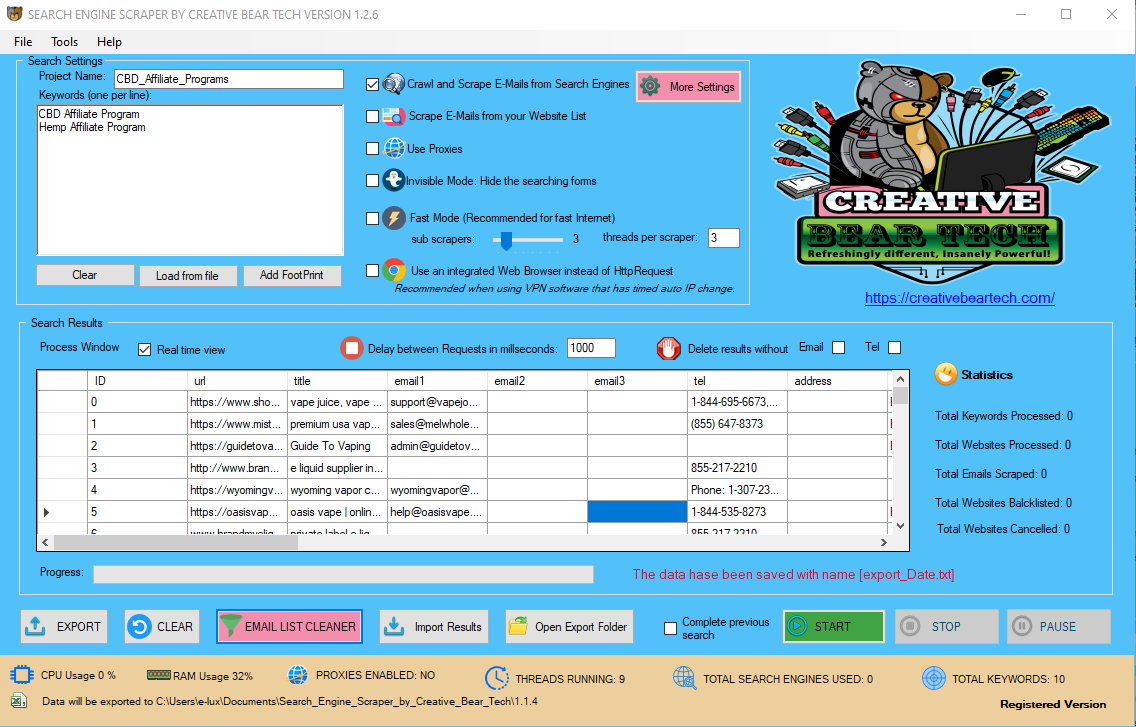

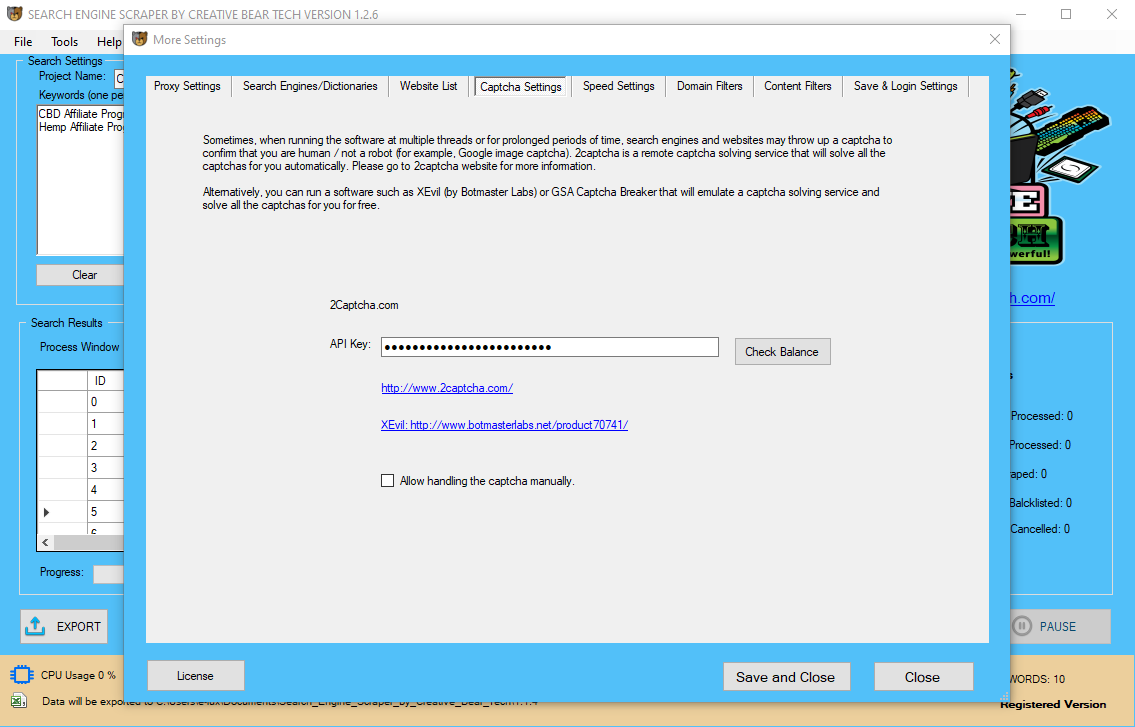

Locating Elements

BeautifulSoup, like Scrapy, is an open-source tool used for web scraping. Unlike Scrapy, however, it is not a web crawling and scraping framework; it is a library for pulling data out of HTML and XML documents. BeautifulSoup is beginner-friendly, and a newcomer can hit the ground running with it.
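To show the kind of work BeautifulSoup takes off your hands, here is a minimal sketch that does the same job with Python's standard-library html.parser instead. That is a deliberate substitution so the example needs no third-party packages. It collects the href and text of every link (for example, recipe URLs); the class and function names and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a href="..."> in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> we are currently inside, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string (e.g. a fetched response body or driver.page_source)."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical markup, for illustration only.
sample = (
    '<ul><li><a href="/recipes/1">Pancakes</a></li>'
    '<li><a href="/recipes/2">Waffles</a></li></ul>'
)
print(extract_links(sample))
# [('/recipes/1', 'Pancakes'), ('/recipes/2', 'Waffles')]
```

With BeautifulSoup installed, the equivalent is roughly `[(a["href"], a.get_text(strip=True)) for a in BeautifulSoup(html, "html.parser").find_all("a", href=True)]`, which is much of its appeal for beginners.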

Find_element

However, this and the other scraped data could just as well have been stored in a flat file or a database. The first decision I had to make was which browser Selenium should drive.
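Either storage option takes only a few lines with Python's standard library. The sketch below assumes hypothetical quote records with text and author fields, writes them as CSV (a flat file), and inserts them into SQLite (a database). An in-memory buffer and an in-memory database stand in for real files here.

```python
import csv
import io
import sqlite3


def to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text (write it to a file in real use)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_sqlite(records, conn):
    """Insert the same records into a SQLite table, creating it if needed."""
    conn.execute("CREATE TABLE IF NOT EXISTS quotes (text TEXT, author TEXT)")
    conn.executemany(
        "INSERT INTO quotes (text, author) VALUES (:text, :author)", records
    )
    conn.commit()


# Hypothetical scraped records, for illustration only.
records = [
    {"text": "Simple is better than complex.", "author": "Tim Peters"},
    {"text": "Readability counts.", "author": "Tim Peters"},
]

print(to_csv(records, ["text", "author"]))

conn = sqlite3.connect(":memory:")  # pass a file path instead to persist the data
to_sqlite(records, conn)
print(conn.execute("SELECT COUNT(*) FROM quotes").fetchone()[0])  # 2
```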

WebElement

Selenium's main use case is automating web applications for testing purposes. In our case, I used it to extract all of the URLs corresponding to the recipes. One of the biggest advantages of the Scrapy framework, by contrast, is that it is built on Twisted, an asynchronous networking library. This means Scrapy spiders don't have to wait for one request to finish before making the next. Instead, they can make multiple HTTP requests concurrently and parse the data as the server returns it.
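Scrapy's concurrency comes from Twisted, but the idea can be illustrated with the standard library's asyncio. The sketch below is an analogy rather than Scrapy code: fake_fetch is a hypothetical stand-in that sleeps instead of doing network I/O, and asyncio.gather runs all five "requests" concurrently.

```python
import asyncio
import time


async def fake_fetch(url, delay=0.2):
    """Hypothetical stand-in for a network request: wait, then return a body."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def fetch_all(urls):
    # gather() starts every coroutine at once and returns results in input order,
    # so total time is close to the slowest single request, not the sum.
    return await asyncio.gather(*(fake_fetch(u) for u in urls))


urls = [f"https://example.com/page/{i}" for i in range(5)]
start = time.perf_counter()
pages = asyncio.run(fetch_all(urls))
elapsed = time.perf_counter() - start
print(len(pages), f"{elapsed:.2f}s")
```

Fetched one after another, the same five requests would take about five times as long; that gap is what lets an asynchronous spider keep the network busy.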

Waiting For An Element To Be Present

To begin, we need the list of all the quotes described above. In this step, however, we won't wrap the call in len(), since we need the individual elements rather than a count. Inspecting each quote element shows that every quote is enclosed in a div with the class name quote. Running driver.find_elements(By.CLASS_NAME, "quote") (driver.find_elements_by_class_name("quote") in older Selenium releases) returns a list of all elements on the page matching this pattern. In addition, you will need a browser driver to simulate browser sessions.
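A minimal sketch of the quote-collection step, assuming a page whose quotes carry class="quote". It passes the locator as the string "class name", which is the value of Selenium's By.CLASS_NAME constant, so the function also works with any stand-in object that has a find_elements method; in everyday code you would import By from selenium.webdriver.common.by and write By.CLASS_NAME. The list comprehension is the usual fix for the fact that .text exists on each element, not on the list.

```python
def scrape_quotes(driver):
    """Return the visible text of every element with class="quote".

    "class name" is the value of selenium.webdriver.common.by.By.CLASS_NAME,
    so this is the same call as driver.find_elements(By.CLASS_NAME, "quote").
    """
    elements = driver.find_elements("class name", "quote")  # a list of WebElements
    # .text is defined on each WebElement, not on the list, hence the comprehension.
    return [element.text for element in elements]
```

With a live browser you would create a driver (for example `webdriver.Chrome()`), call `driver.get(...)` on the quotes page, then call `scrape_quotes(driver)`; wrapping the `find_elements` call in `len()` instead gives just the count.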

Executing Javascript

However, visually rendering web pages is usually unnecessary when web scraping and adds computational overhead. Furthermore, scraping projects are often run on servers without displays. Headless browsers are full browsers without a graphical user interface: they require far fewer computing resources and can run on machines without displays.

In the full-time case, dedicated web scrapers may be responsible for maintaining infrastructure, building projects, and monitoring their performance. Though most professional web scrapers fall into the first category, the number of full-time web scrapers is growing.

Search engines can be scraped to track the ranking of results for certain keywords over time. Marketers can use this data to uncover opportunities and track their performance. Researchers can use it to track the popularity of individual results, such as brands or products, over time.

We aren't just getting plain titles; we're getting a list of Selenium WebElement objects that contain the titles. In the folder we created earlier, create a webscraping_example.py file and include the following code snippets. ChromeDriver provides a platform to launch and perform tasks in the specified browser. Firefox, for example, requires geckodriver, which must be installed before the examples below can be run. One important thing to consider here, though: ‘.find_elements’ (plural) returns a list of elements, so simply appending ‘.text’ to it will fail. As with any other Python list, a simple list comprehension solves this.

This article assumes that the reader is familiar with the fundamentals of Ruby and of how the Internet works. Web scraping is a useful practice when the data you need is accessible through a web application that doesn't provide a suitable API.
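Headless mode is switched on through browser options. The sketch below keeps the Chrome command-line switches in a small pure function and applies them in make_chrome_driver, which imports Selenium lazily so the switch-building part runs even where Selenium is not installed. The function names, the specific switches, and the window size are example choices, not requirements.

```python
def chrome_arguments(headless=True, window_size=(1280, 800)):
    """Build the command-line switches to pass to Chrome via ChromeOptions."""
    args = []
    if headless:
        # Newer headless mode; older Chrome versions used a plain "--headless".
        args.append("--headless=new")
    width, height = window_size
    args.append(f"--window-size={width},{height}")
    return args


def make_chrome_driver(headless=True):
    """Start Chrome through Selenium (needs `pip install selenium` and Chrome)."""
    # Imported here so chrome_arguments() above works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in chrome_arguments(headless):
        options.add_argument(arg)
    return webdriver.Chrome(options=options)
```

For Firefox, the equivalent is `options = webdriver.FirefoxOptions()`, `options.add_argument("-headless")`, then `webdriver.Firefox(options=options)`, with geckodriver available as described above.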
It takes some non-trivial work to extract data from modern web applications, but mature, well-designed tools like Requests, BeautifulSoup, and Selenium make it worthwhile. Requests fetches web pages from the server without the help of a browser: you get exactly what you see in "view page source", and then you slice and dice it.

Python is popular for this kind of work, so many libraries and frameworks exist to help with development, and there is a large community of developers who currently build Python bots. This makes recruiting developers easier and also means help is easier to get when needed from sites such as Stack Overflow. Besides its popularity, Python has a relatively gentle learning curve, the flexibility to accomplish a wide variety of tasks easily, and a clear coding style.

Some web scraping tasks are better suited to using a full browser to render pages. This means launching a full web browser the same way a regular user would, with pages loaded and visible on a display. There are several option parameters you can set on your Selenium WebDriver. In this guide, we'll explore how to scrape a web page with the help of Selenium WebDriver and BeautifulSoup, using an example script that scrapes authors and courses from pluralsight.com for a given keyword. Using Selenium means fetching all the resources that would normally be fetched when you visit a page in a browser: stylesheets, scripts, images, and so on. Alternatively, you can set up a graphical workflow, simulate human interaction in your browser of choice, and replay it as often as you like, without writing a single line of code.
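To make the "no browser" point concrete, here is a sketch of fetching raw HTML with the standard library's urllib.request, used in place of Requests so the block has no dependencies. The function name and the User-Agent value are arbitrary examples. With Requests installed, `requests.get(url, timeout=10).text` does the same job.

```python
from urllib.request import Request, urlopen


def fetch_html(url, timeout=10):
    """Download a page's raw HTML without a browser; no JavaScript is executed."""
    # Some servers reject Python's default user agent; this value is just an example.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (example-scraper)"})
    with urlopen(request, timeout=timeout) as response:
        # Honor the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)
```

Whatever this returns is all a static parser gets to see; content injected later by JavaScript will be missing, which is where Selenium comes in.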
After importing parsel in your IPython session, enter driver.page_source to load the complete source code of the Google search page, which looks like something out of the Matrix. Download ChromeDriver, a separate executable that WebDriver uses to control Chrome. You will also need the Google Chrome browser installed for this to work. In this tutorial, we'll use a simple Ruby file, but you could also create a Rails app that scrapes a website and saves the data to a database.

If you have a programming background, picking up the skills should come naturally. Even without programming experience, you can quickly learn enough to get started. A beginner should have no problem scraping data that is visible on a single, well-structured HTML page, whereas a website with heavy AJAX, complex authentication, and anti-bot technology would be very difficult to scrape. Automated web scraping offers numerous advantages over manual collection. From what you read here, you'll know which of the tools to use depending on your skill and individual project requirements. If you aren't familiar with web scraping, I advise you to read our guide to web scraping, and also take a look at our tutorial on how to build a simple web scraper using Python. When scraping is done through Selenium, it offers support for multiple browsers. Suppose a day trader wants to access data from a website every day. Each time the day trader clicks a button, it should automatically pull the market data into Excel.

- This guide will explain the process of building a web scraping program that scrapes data and downloads files from Google Shopping Insights.

- Web scraping with Python and Beautiful Soup is an excellent tool to have in your skill set.

- Use web scraping when the data you need to work with is available to the public, but not necessarily conveniently available.

- In contrast, when a spider built with Selenium visits a page, it first executes all the JavaScript on the page before handing the content to the parser.
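Selenium can also run your own JavaScript inside the page through driver.execute_script, the technique behind the "Executing Javascript" section. A common use, sketched below under the assumption that the target page loads more items as you scroll, is scrolling until the page height stops growing. The function name, pause, and round limit are illustrative values to tune per site.

```python
import time


def scroll_to_bottom(driver, pause=1.0, max_rounds=20):
    """Scroll until the page stops growing (e.g. an infinite-scroll feed).

    Returns the final document height reported by the browser.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for _ in range(max_rounds):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give newly triggered content time to load
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new loaded, so we have reached the end
        last_height = new_height
    return last_height
```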

A tradeoff is that headless browsers do not behave exactly like full, graphical browsers. For example, a full, graphical Chrome browser can load extensions while a headless Chrome browser cannot (source). Manual web scraping is the process of copying and pasting data from websites into spreadsheets by hand. It commonly arises naturally out of a business need: it may start as occasional copying and pasting by business analysts but can eventually become a formalized business process. Earlier, I mentioned that it's helpful to click on the elements you want to scrape. Once you've done that, you can use another part of the IDE to identify various paths for locating the link or text you need. WebDriver accepts commands through program code (in a variety of languages) and executes them in a real web browser. Once the browser is launched, WebDriver automates the commands in the script and can simulate the full range of user interactions with the page, including scrolling, clicking, and typing.

Since I typically use Chrome, and it's built on the open-source Chromium project (also used by the Edge, Opera, and Amazon Silk browsers), I figured I would try that first. The next statement is a conditional that is true only when the script is run directly; this prevents the statements that follow from running when the file is imported. It initializes the driver and calls the lookup function to search for "Selenium".

To start extracting data from the web pages, we'll take advantage of the patterns mentioned above in the pages' underlying code. This often requires "best guess navigation" to find the specific data you're looking for. I wanted to use the public data provided for the schools within Kansas in a research project. Large-scale applications may require more advanced techniques, leveraging languages such as Scala and more advanced hardware architectures.

Generally, web scraping is done as a far more efficient alternative to collecting data manually, because it allows much more data to be collected at lower cost and in less time. Once data is extracted from a website, it's typically stored in a structured format, such as an Excel file or CSV spreadsheet, or loaded into a database. This "web scraped data" can then be cleaned, parsed, aggregated, and transformed into a format suitable for its end user, whether a person or an application.

As a caveat, this doesn't mean every website should be scraped. Some have legitimate restrictions in place, and there have been numerous court cases deciding the legality of scraping certain sites. On the other hand, some sites welcome and encourage data retrieval and in some cases provide an API to make things easier. For this project, the count was returned to a calling application.
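The lookup function described above might look something like this minimal sketch. The original code isn't shown, so the function body, the url parameter, and the assumption that the search box has name="q" are all hypothetical; "name" is the value of Selenium's By.NAME.

```python
def lookup(driver, query, url):
    """Open `url`, type `query` into its search box, and submit the form.

    Hypothetical: assumes the search input has name="q".
    """
    driver.get(url)
    box = driver.find_element("name", "q")  # same as find_element(By.NAME, "q")
    box.clear()            # remove any pre-filled text
    box.send_keys(query)   # simulate typing
    box.submit()           # submit the <form> that contains the input
```

A script would typically put the calls in the `if __name__ == "__main__":` guard described above: create the driver (for example with `webdriver.Chrome()`), call `lookup(driver, "Selenium", url)`, and call `driver.quit()` in a `finally` clause so the browser closes even when something fails.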
I use the nifty highlightElement function to confirm graphically in the browser that this is the element I think it is. Sometimes the source code you've scraped for a website doesn't contain all the data you see in your browser. When Selenium launches the browser, you should see a message stating that it is being controlled by automated test software. Let's say you want to scrape a single-page application and you can't find an easy way to call the underlying APIs directly; then Selenium might be what you need. The Selenium API uses the WebDriver protocol to control a web browser, such as Chrome, Firefox, or Safari.

Social media offers an abundance of data that can be used for all kinds of purposes. The first challenge in web scraping is understanding what is possible and identifying what data to collect. This is where an experienced web scraper has a big advantage over a novice. Still, once the data has been identified, many challenges remain. If the content you're looking for is present in the static page source, you need go no further. However, if the content is something like the Disqus comments iframe, you need dynamic scraping.

Conveniently, Python also has strong support for data manipulation once the web data has been extracted. R is another good choice for small- to medium-scale post-scraping data processing. Many programming languages can be used to build web scraping projects, such as Python, Ruby, Go, R, Java, and JavaScript, but we recommend Python since it's the most popular one for web scraping. Python 2 shipped with two built-in modules, urllib and urllib2, for handling HTTP requests; in Python 3 that functionality lives in the urllib package (urllib.request and related modules). Requests is a third-party Python library designed to simplify making HTTP requests. This is highly useful for web scraping, because the first step in any web scraping workflow is sending an HTTP request to the website's server to retrieve the data displayed on the target page.
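The highlightElement helper isn't shown in the text, so the following is a hypothetical Python version of the same idea: use execute_script to add a colored border to a WebElement so you can see in the browser window which element your locator matched. The name highlight_element and the border style are arbitrary choices.

```python
def highlight_element(driver, element, color="red", width=3):
    """Draw a border around `element` in the live browser; return its old style.

    Inside the script, arguments[0] and arguments[1] are the extra values
    passed to execute_script: the WebElement and the new style string.
    """
    original = element.get_attribute("style") or ""
    border = f"border: {width}px solid {color};"
    prefix = original.strip().rstrip(";")
    # Keep any existing inline style and append the border declaration.
    new_style = f"{prefix}; {border}" if prefix else border
    driver.execute_script(
        "arguments[0].setAttribute('style', arguments[1]);", element, new_style
    )
    return original
```

To remove the highlight, pass the returned original style back through the same `setAttribute` script.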
However, if you search "how to build a web scraper in Python," you'll get numerous answers on the best way to develop a Python web scraping project. Most commonly, programmers write custom software to crawl specific websites in a predetermined fashion and extract data for several specified fields. I was struggling with my own Python-based web scraping project because of iframes and JavaScript while using Beautiful Soup.

Now the Excel file is able to interact with Internet Explorer. The next step is to include a macro script that facilitates data scraping from HTML. There are certain prerequisites that need to be set up in the Excel macro file before getting into the process of data scraping in Excel. Selenium can be categorized as an automation tool that facilitates scraping data from HTML web pages, for example by driving Google Chrome.

First and foremost, an automated process can collect data far more efficiently: much more data can be collected in a much shorter time than with manual processes. Second, it eliminates the potential for human error and can perform complex data validation to further ensure accuracy. Finally, in some cases, automated web scraping can capture data from web pages that is invisible to regular users. Many kinds of software and programming languages can be used to carry out the post-scraping tasks.

Many jobs require web scraping skills, and many people are employed as full-time web scrapers. In the former case, programmers or research analysts with separate primary responsibilities become responsible for a set of web scraping tasks.

With WebDriverWait, you wait exactly as long as necessary for your element or data to load. This will launch Chrome in headful mode (like a regular Chrome window, but controlled by your Python code). You'll see how to navigate the code, choose better element tags, and collect your data. To scrape the full details of a comment or user profile, click the button that reveals them. You can see from the example above that Beautiful Soup retrieves a JavaScript link for each job title at the state agency. Then, in the body of the for ... in loop, Selenium clicks each JavaScript link. Like many government websites, though, it buries the data in drill-down links and tables.

Companies who choose this option have identified a need for web scraped data but generally don't have the technical expertise or infrastructure to collect it automatically. If the answer to both of these questions is "Yes," then your business may be a good candidate for a web scraping strategy. Web scraping can help your business make better-informed decisions, reach targeted leads, or track your competitors. Consulting an experienced web scraper can help you discover what is possible. Excel offers the lowest learning curve but is limited in its capabilities and scalability. R and Python are open-source programming languages that require programming skills but are almost limitless in their ability to manipulate data. This significantly increases the speed and efficiency of a web scraping spider. Within minutes of installing the framework, you can have a fully functioning spider scraping the web.
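WebDriverWait works by polling a condition until it succeeds or a timeout expires, rather than sleeping for a fixed time. The stdlib-only sketch below illustrates that polling loop with a hypothetical wait_until function; it is a simplified illustration, not Selenium's implementation (which, among other things, ignores NoSuchElementException between polls by default).

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call `condition()` until it returns something truthy, then return that value.

    Raises TimeoutError if the condition is still falsy after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value  # ready: stop waiting immediately
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)  # short pause before checking again
```

In real Selenium code the same wait reads `WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CLASS_NAME, "quote")))`, with `WebDriverWait` imported from `selenium.webdriver.support.ui` and `expected_conditions` imported as `EC` from `selenium.webdriver.support`.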
Out of the box, Scrapy spiders are designed to download HTML, parse and process the data, and save it in CSV, JSON, or XML format. One of the big advantages of BeautifulSoup is its simplicity and its ability to automate some of the recurring parts of parsing data during web scraping. The Requests library is well suited to the first part of the web scraping process (retrieving the web page data). Scrapy has been built to consume little memory and use CPU resources sparingly; in fact, some benchmarks have claimed that Scrapy is 20 times faster than the other tools at scraping. This article discusses these three popular tools and gives a complete explanation of each.

This post will cover the major tools and techniques for web scraping in Ruby. We start with an introduction to building a web scraper using common Ruby HTTP clients and parsing the response. This approach to web scraping, however, has its limitations and can come with a good dose of frustration. Not to mention that, as manageable as it is to scrape static pages, these tools fail when it comes to Single Page Applications, whose content is built with JavaScript. As a solution, we propose using a complete web scraping framework.

With a fixed sleep, the problem is that you are either waiting too long or not long enough. Also, the website may load slowly on your local Wi-Fi connection but be 10 times faster on your cloud server. Since I want to grab all the funds at once, I tell Selenium to select the entire table. Going a few levels up from the individual cell I selected, I see that this is the HTML tag that contains the whole table, so I tell Selenium to find this element. When you load the leaves of the sub_category_links dictionary above, you'll encounter pages with a ‘Show More’ button, as shown in the image below.
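Once the table element is located, its rows can be read cell by cell. A minimal sketch, assuming a conventional table built from tr/td elements and using "tag name" (the value of By.TAG_NAME) so it can be exercised without a browser; the function name table_rows is hypothetical.

```python
def table_rows(table):
    """Turn a located <table> WebElement into a list of rows of cell text.

    Header rows made only of <th> cells contain no <td>, so they are skipped.
    """
    rows = []
    for tr in table.find_elements("tag name", "tr"):
        cells = tr.find_elements("tag name", "td")
        if cells:
            rows.append([cell.text.strip() for cell in cells])
    return rows
```

Each `.text` access is a separate round trip to the browser driver, so for very large tables it can be faster to grab `table.get_attribute("outerHTML")` once and parse it offline.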
Selenium shines at tasks like this, where we can actually click the button using the element.click() method. Through the Python API, we can access all the functionality of Selenium's web drivers for Firefox, IE, Chrome, and so on. The Selenium Python API can be installed with the command pip install selenium. Scraping the data with Python and saving it as JSON was what I needed to do to get started. Using the Python programming language, it's possible to "scrape" data from the web quickly and efficiently.
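A sketch of the ‘Show More’ loop, assuming the button is a button element whose visible text is exactly "Show More"; the function name, XPath, pause, and click limit are hypothetical choices to adapt to the real page. find_elements returns an empty list instead of raising when nothing matches, which makes it a convenient stop condition.

```python
import time


def click_show_more(driver, button_text="Show More", pause=1.0, max_clicks=50):
    """Click a 'Show More' button until it disappears; return the click count."""
    # "xpath" is the value of Selenium's By.XPATH.
    xpath = f"//button[normalize-space()='{button_text}']"
    clicks = 0
    while clicks < max_clicks:
        # Re-find the button every round: the old reference may go stale.
        buttons = driver.find_elements("xpath", xpath)
        if not buttons or not buttons[0].is_displayed():
            break  # no more content to load
        buttons[0].click()
        clicks += 1
        time.sleep(pause)  # let the next batch of items render
    return clicks
```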
